What is machine learning? Types, practical applications and how it works

Machine learning is the application of statistical, mathematical and numerical techniques to gain knowledge from data. These insights can lead to summaries, visualisation, clustering or even predictive and prescriptive value on datasets.

Regardless of the beliefs that surround it, it is important to highlight that machine learning is not a replacement for analytical thinking and critical work in data science, but rather a supplement to support more responsive decision-making.

Furthermore, machine learning is also characterised as a set of diverse fields and a collection of tools that can be applied to a specific subset of data to address a problem and provide a solution to it.

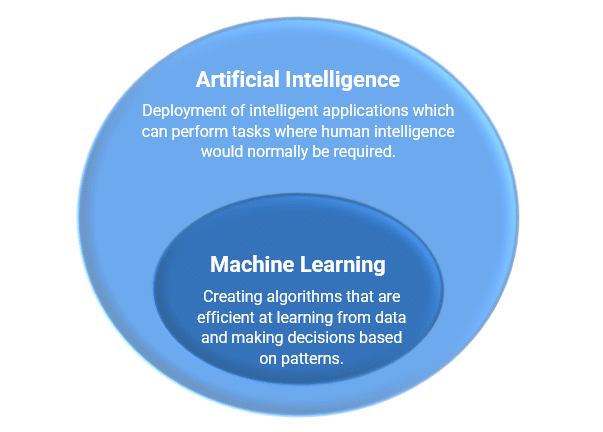

Machine learning is closely related to artificial intelligence, and also to data mining, deep learning and statistical learning.

Artificial intelligence is the ability of a computer system to emulate human cognitive functions including thinking, learning and problem-solving. Using AI, a computer system applies logic and mathematics to imitate the same reasoning that people use to make decisions and learn from new information.

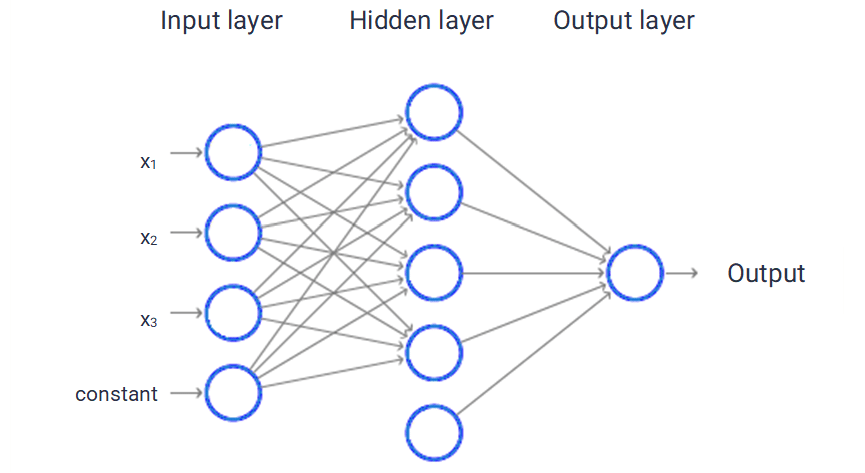

Machine learning is the process of using mathematical models of data to help a computer learn without direct instructions, so that the system can keep learning and improving from experience. Machine learning can therefore be understood as an application of AI.

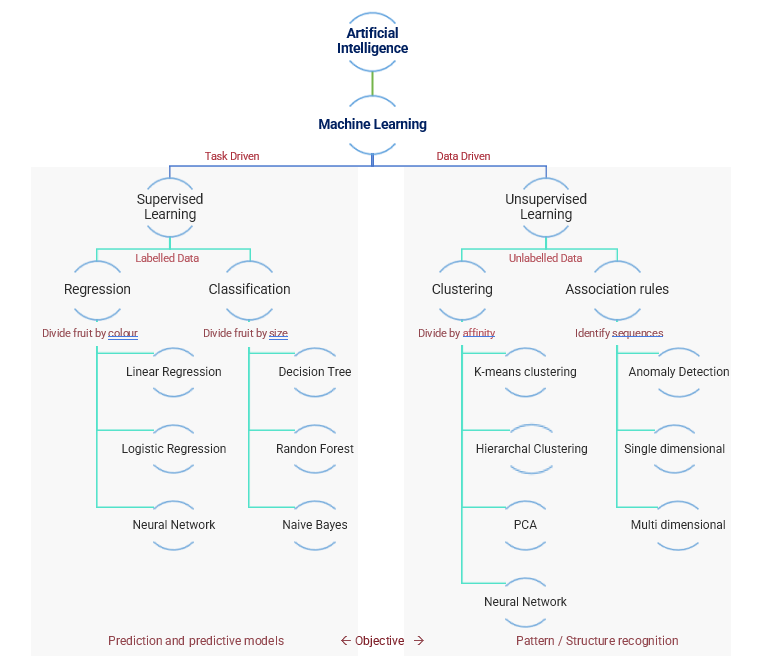

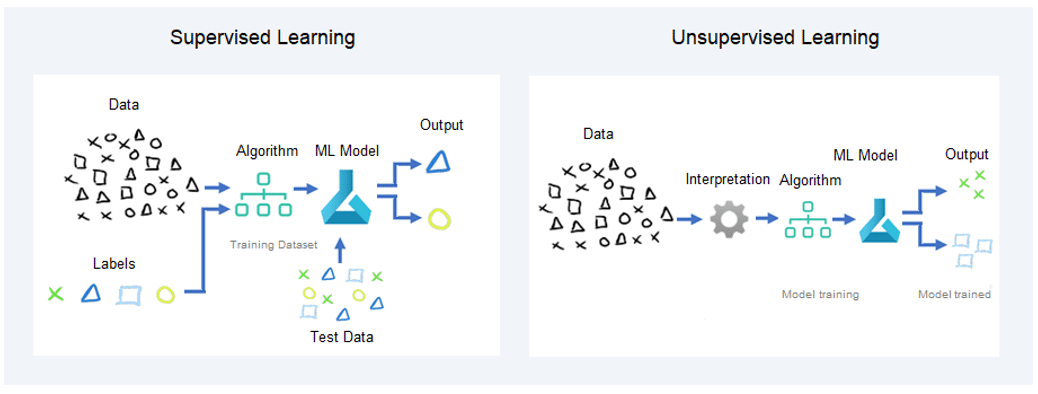

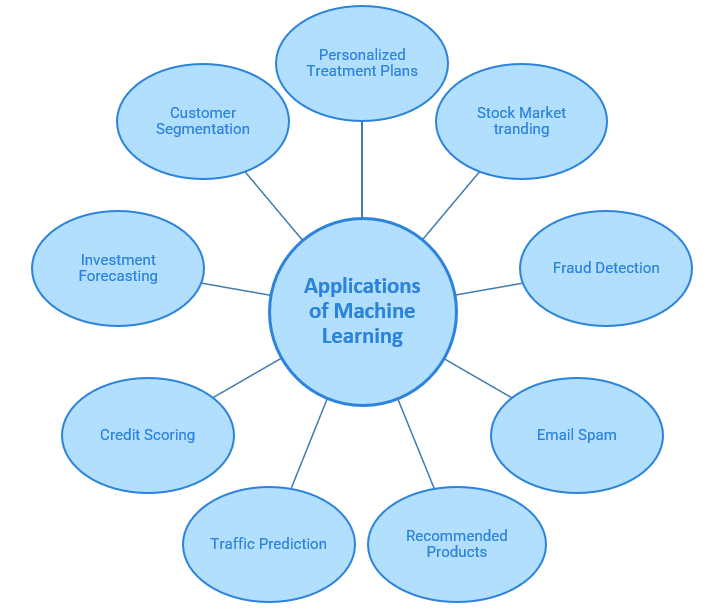

Machine learning refers to a set of techniques commonly used to solve a range of real-world problems with computational systems that learn how to solve a problem rather than being explicitly programmed to do so. Broadly, we can distinguish between supervised and unsupervised machine learning.

The main differences between these learning models are the following:

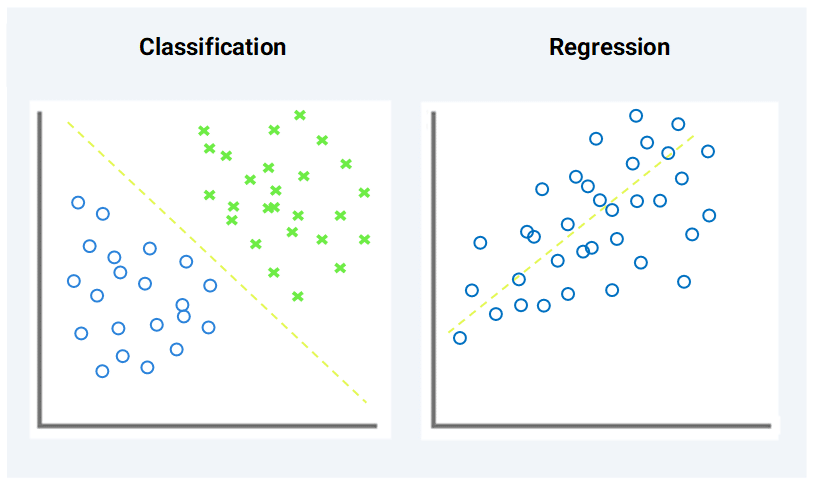

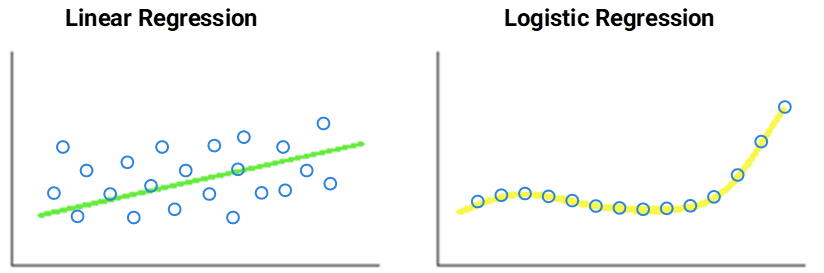

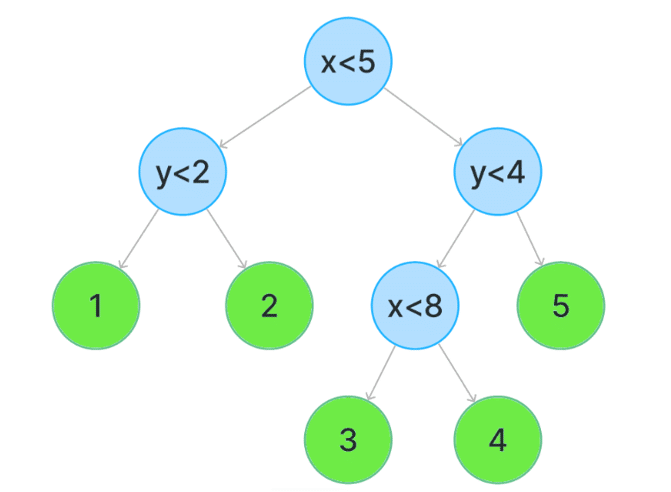

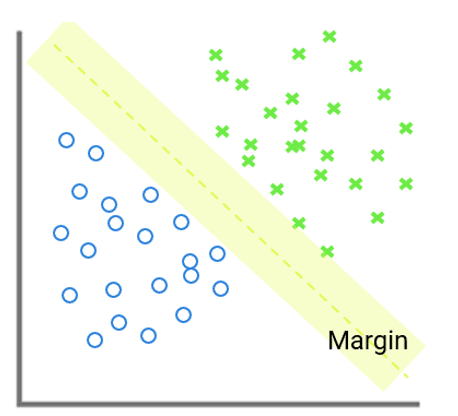

In data mining, supervised learning problems fall into two types, classification and regression:

Regression algorithms: These are models that predict continuous (numerical) values, in contrast to classification algorithms, which assign data to discrete categories.

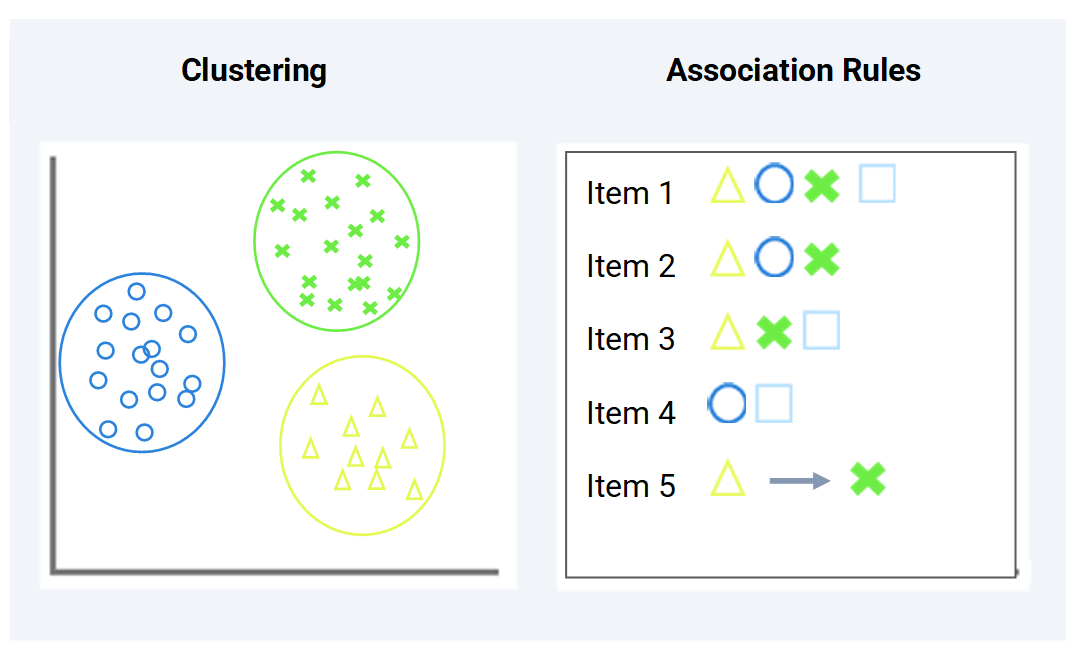

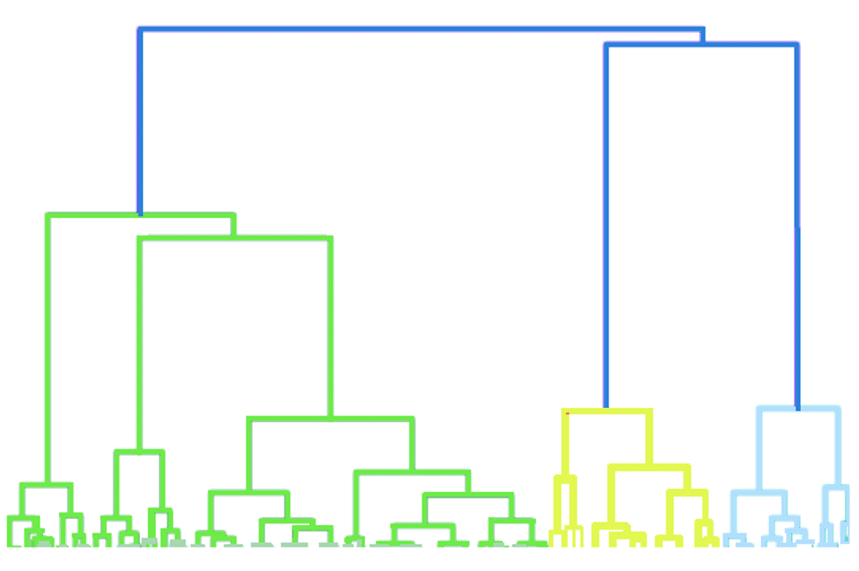

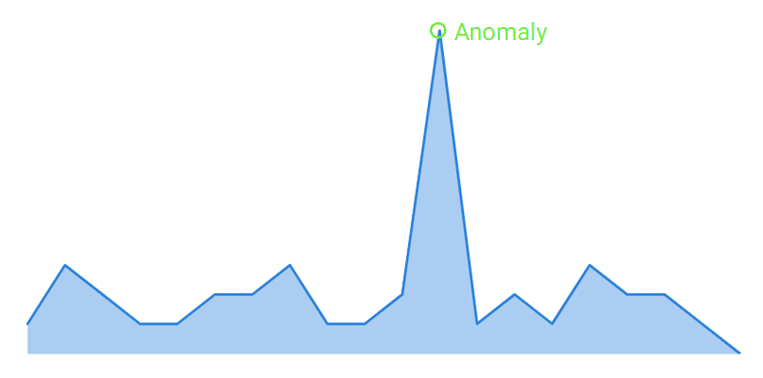

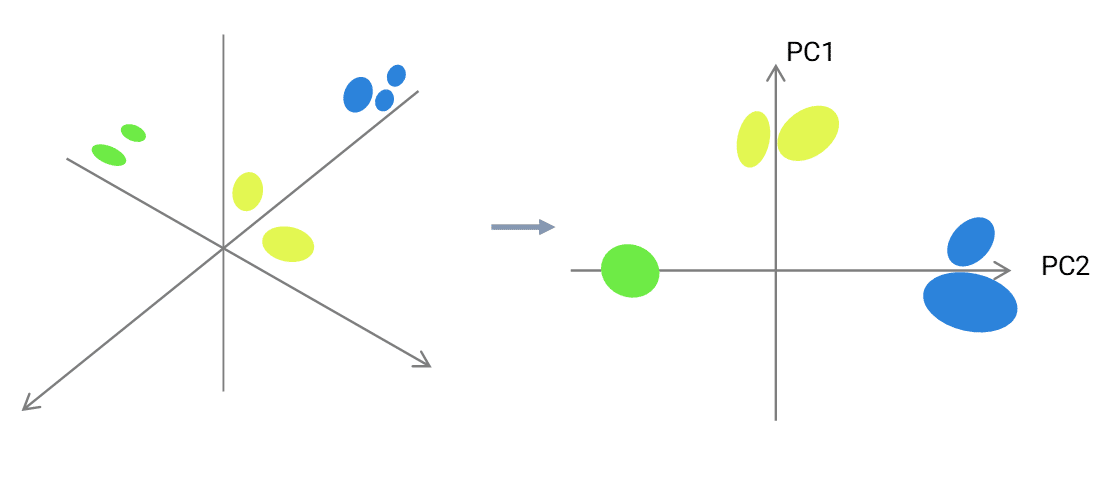

Unsupervised learning can be further divided into two types of problems:

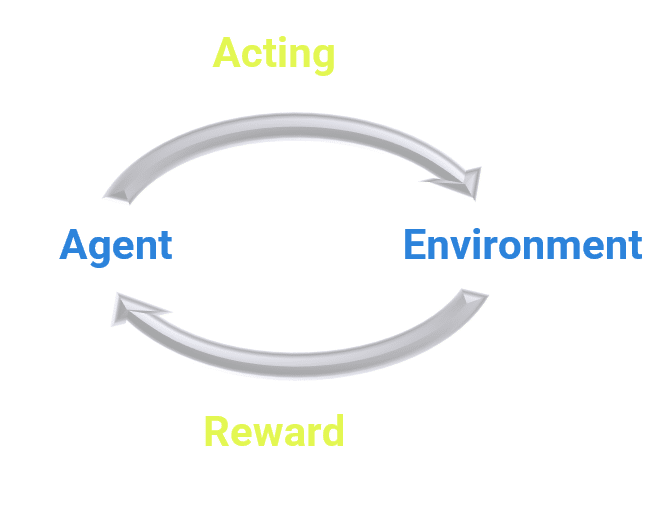

Reinforcement learning is an area of machine learning concerned with taking suitable actions to maximise the reward in a given situation. Many computer programs and machines use it to find the best possible behaviour or path to follow in a specific situation.

Reinforcement learning contrasts with supervised learning. In supervised learning the training data contains the answer key, so the model is trained with the right answer. In reinforcement learning there is no answer key: the reinforcement agent chooses what to do in order to perform the task and learns from the rewards its actions produce.
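To make the idea of learning from reward more concrete, here is a minimal sketch of an epsilon-greedy agent on a toy "multi-armed bandit" problem. The setup is an assumption made purely for illustration: the function name `run_bandit`, the three reward means and the parameter values are all hypothetical. The agent is never told which action is correct; it only observes the reward that follows each action it chooses.

```python
import random

def run_bandit(true_means, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a toy multi-armed bandit (all values are illustrative)."""
    rng = random.Random(seed)
    n_actions = len(true_means)
    counts = [0] * n_actions        # how many times each action was tried
    estimates = [0.0] * n_actions   # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit
        # The environment returns a noisy reward; the agent never sees true_means.
        reward = rng.gauss(true_means[action], 1.0)
        counts[action] += 1
        # Incremental update of the average reward observed for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

# Hypothetical environment: the third action pays best on average.
estimates, counts = run_bandit([0.2, 0.5, 2.0])
best_action = max(range(len(estimates)), key=lambda a: estimates[a])
print("estimated rewards:", [round(e, 2) for e in estimates])
print("preferred action:", best_action)
```

The small `epsilon` keeps the agent trying other actions occasionally, so it does not settle too early on an action that only looked good by chance.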

Depending on the situation we are dealing with, we will have to choose between one method or another. For example, if we want to determine the number of phenotypes in a population, organise financial data or identify similar individuals from DNA sequences, we can work with clustering.
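As a rough illustration of clustering, the sketch below implements a small k-means routine in plain Python. The data points are made up, and the deterministic "farthest point" initialisation is a simplifying assumption chosen so that the example is reproducible; real libraries typically use more refined initialisation schemes.

```python
def sq_dist(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def farthest_point_init(points, k):
    """Start from the first point, then repeatedly add the point farthest from all centroids."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(sq_dist(p, c) for c in centroids)))
    return centroids

def assign(points, centroids):
    """Label each point with the index of its nearest centroid."""
    return [min(range(len(centroids)), key=lambda c: sq_dist(p, centroids[c])) for p in points]

def kmeans(points, k, iterations=10):
    centroids = farthest_point_init(points, k)
    for _ in range(iterations):
        labels = assign(points, centroids)
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assign(points, centroids)

# Made-up measurements for eight individuals forming two visible groups.
points = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.1), (1.1, 1.3),
          (8.0, 8.0), (8.2, 7.9), (7.8, 8.3), (8.1, 8.1)]
centroids, labels = kmeans(points, k=2)
print(labels)
```

No labels are supplied at any point: the grouping comes only from how close the points are to one another.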

On the other hand, if the problem is about predicting whether a particular email is spam or predicting whether a manufactured product is defective, we are trying to identify the most appropriate class for an input data point, so using a classification algorithm would be most appropriate.
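The spam example can be sketched with a tiny naive Bayes text classifier. Naive Bayes is only one of many possible classification algorithms and is used here as an assumption for illustration; the six training emails and the function names are invented.

```python
import math
from collections import Counter

def train_naive_bayes(examples):
    """examples: list of (text, label) pairs. Returns the counts the classifier needs."""
    word_counts = {}          # label -> Counter of words seen with that label
    doc_counts = Counter()    # label -> number of training emails
    vocab = set()
    for text, label in examples:
        doc_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in text.lower().split():
            counts[word] += 1
            vocab.add(word)
    return word_counts, doc_counts, vocab

def predict(model, text):
    """Return the label with the highest log-probability for the given text."""
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    best_label, best_score = None, -math.inf
    for label in doc_counts:
        score = math.log(doc_counts[label] / total_docs)  # class prior
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word in vocab:  # words never seen in training are ignored
                # Laplace (add-one) smoothing avoids zero probabilities.
                score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Invented, labelled training data: the "answer key" of supervised learning.
training = [
    ("win free money now", "spam"),
    ("free prize claim now", "spam"),
    ("cheap money offer", "spam"),
    ("meeting agenda for monday", "ham"),
    ("project report attached", "ham"),
    ("lunch on monday", "ham"),
]
model = train_naive_bayes(training)
print(predict(model, "claim your free money"))
print(predict(model, "monday project meeting"))
```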

Other situations that can be tackled are predicting house prices, estimating life expectancy, or inferring causality between variables that are not directly observable or measurable, in which case regression would be the most convenient algorithm to work with.
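For the house price case, a minimal sketch of simple linear regression (ordinary least squares with one input variable) could look like this. The sizes and prices are invented numbers, and a real model would normally use many more features and data points.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one input variable: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Invented data: floor area in square metres and price in thousands.
sizes = [50, 70, 90, 110, 130]
prices = [150, 200, 260, 300, 360]

slope, intercept = fit_line(sizes, prices)

def predict_price(size):
    """Predict a continuous value for a new input."""
    return slope * size + intercept

print(round(slope, 2), round(intercept, 2), round(predict_price(100), 2))
```

Unlike the classification case, the output is a number on a continuous scale rather than one of a fixed set of classes.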

We also recommend this blog on machine learning for data analysis to find out how it can work closely with apps like Power BI to enhance any company’s operations. Seeing business analytics and data science working together can be truly fascinating.

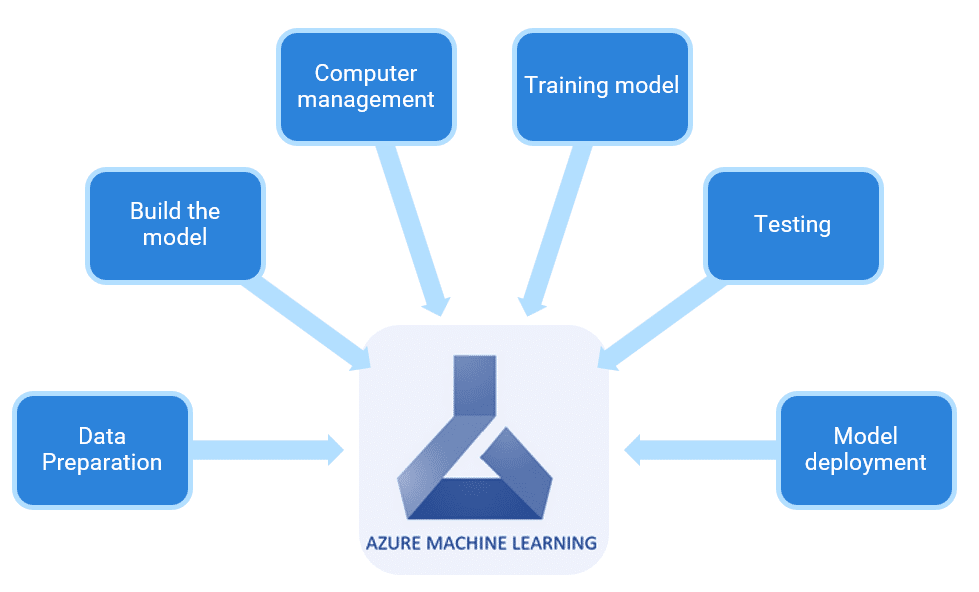

Azure Machine Learning is a cloud service for automating and managing the entire lifecycle of machine learning (ML) projects. This service can be used in your daily workflows to train and deploy models and manage machine learning operations (MLOps).

Azure Machine Learning has tools that enable you to:

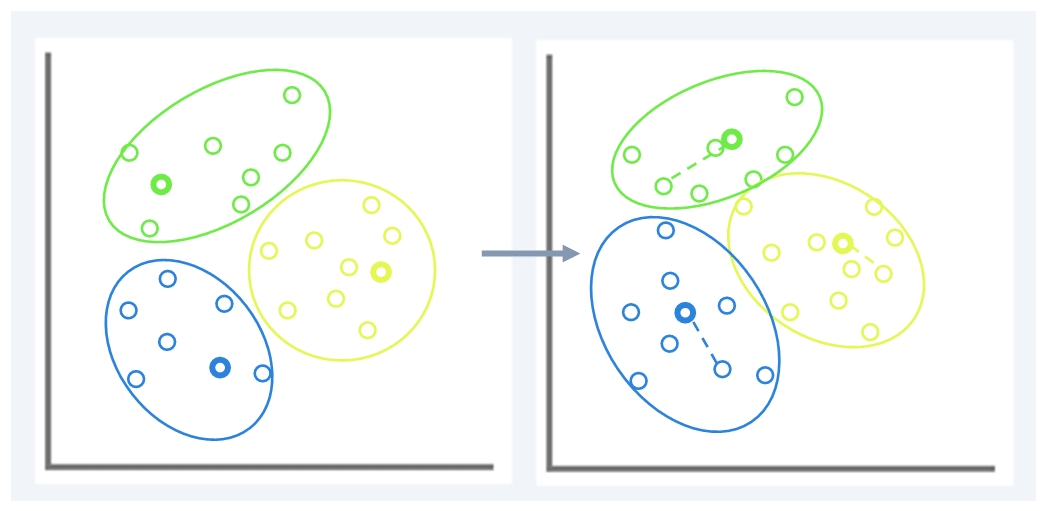

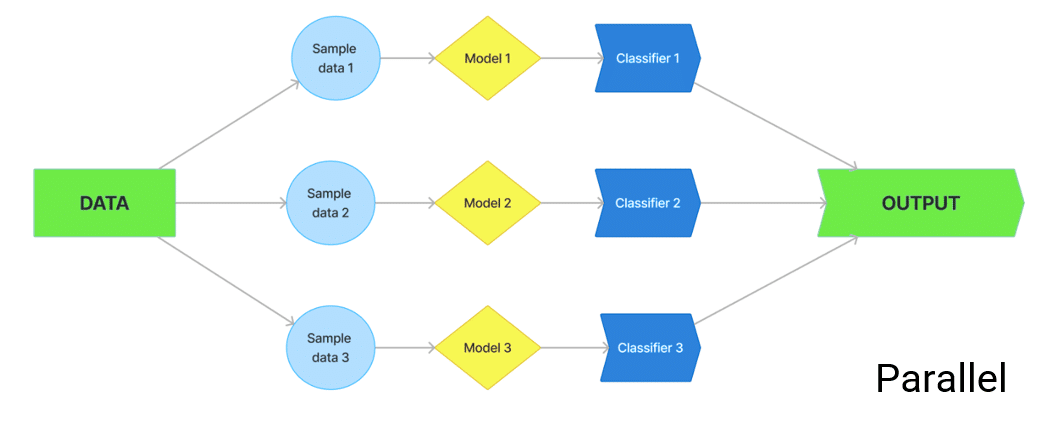

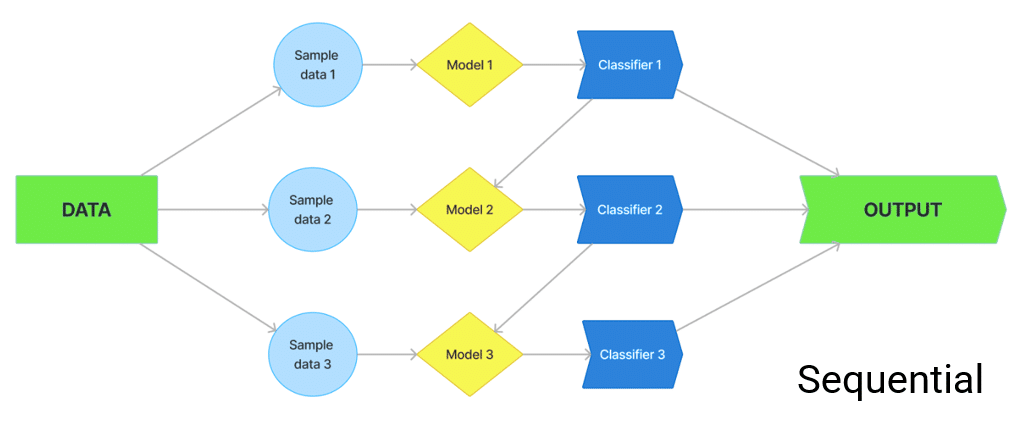

Different ensemble strategies have been developed over time to improve the generalisability of learning models. Ensemble techniques aim to improve prediction accuracy compared with a single classifier trained on the same dataset. The two most popular ensemble techniques are bagging and boosting, both of which combine the results obtained by several machine learning models.

Bagging (bootstrap aggregating) is used to reduce the variance of a classifier. It draws several random subsets from the dataset by sampling with replacement, then trains the same learning method on each subset. The results obtained from each subset are then averaged (or, for classification, combined by majority vote), which generally provides better results than a single classifier.
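The bagging procedure can be sketched in a few lines of plain Python. This is an illustrative assumption rather than a reference implementation: the base learner is a one-feature decision stump, the data is made up, and because the outputs are class labels the individual results are combined by majority vote instead of a numerical average.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """Pick the threshold (and orientation) that misclassifies the fewest training points."""
    best = None
    for threshold in sorted(set(xs)):
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x <= threshold else right) != y for x, y in zip(xs, ys))
            if best is None or errors < best[0]:
                best = (errors, threshold, left, right)
    _, threshold, left, right = best
    return lambda x: left if x <= threshold else right

def bagging_fit(xs, ys, n_models=25, seed=0):
    """Train the same base learner on several bootstrap samples of the data."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # sample indices with replacement
        models.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def bagging_predict(models, x):
    """Combine the individual predictions by majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]

# Made-up one-feature dataset: small values belong to class 0, large values to class 1.
xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ys = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

models = bagging_fit(xs, ys)
print(bagging_predict(models, 2), bagging_predict(models, 9))
```

Each stump sees a slightly different sample, so their thresholds differ; voting across them smooths out those differences.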

Boosting is an ensemble method that builds a strong classifier from a number of weak classifiers. To do this, weak models are trained in series, with each new model built to correct the errors of the previous one. This continues until either the training data is predicted correctly or the maximum number of models has been added.
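One well-known boosting algorithm is AdaBoost, and the sketch below uses it as an assumed example of the general idea; other boosting variants work differently in the details. The weak models are one-feature decision stumps, the dataset is invented, and all function names are hypothetical. The labels are chosen so that no single stump can classify every point correctly.

```python
import math

def stump_predict(x, threshold, sign):
    """Predict `sign` for x <= threshold and `-sign` otherwise."""
    return sign if x <= threshold else -sign

def fit_weighted_stump(xs, ys, weights):
    """Find the stump with the lowest weighted error."""
    best = None
    for threshold in sorted(set(xs)):
        for sign in (1, -1):
            error = sum(w for x, y, w in zip(xs, ys, weights)
                        if stump_predict(x, threshold, sign) != y)
            if best is None or error < best[0]:
                best = (error, threshold, sign)
    return best

def adaboost_predict(ensemble, x):
    """Weighted vote of all weak models."""
    score = sum(alpha * stump_predict(x, t, s) for alpha, t, s in ensemble)
    return 1 if score >= 0 else -1

def adaboost_fit(xs, ys, max_models=10):
    n = len(xs)
    weights = [1.0 / n] * n
    ensemble = []
    for _ in range(max_models):
        error, threshold, sign = fit_weighted_stump(xs, ys, weights)
        error = max(error, 1e-10)  # guard against division by zero for a perfect stump
        alpha = 0.5 * math.log((1 - error) / error)
        ensemble.append((alpha, threshold, sign))
        # Stop early once the combined model predicts the training data correctly.
        if all(adaboost_predict(ensemble, x) == y for x, y in zip(xs, ys)):
            break
        # Increase the weight of misclassified points, decrease the rest, then normalise.
        weights = [w * math.exp(-alpha * y * stump_predict(x, threshold, sign))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

# Invented data: class +1 at both ends, class -1 in the middle.
xs = [1, 2, 3, 4, 5, 6, 7, 8, 9]
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1]

ensemble = adaboost_fit(xs, ys)
print(len(ensemble), [adaboost_predict(ensemble, x) for x in xs])
```

The reweighting step is what makes each new stump concentrate on the points the earlier stumps got wrong.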

Though there is much more to the field of machine learning, this was an introduction to its basics, as well as a quick look at some of its practical applications.

Elastacloud
